A webinar unveiling how GoReact is combining artificial intelligence and authentic feedback to empower instructors and coaches to make a bigger impact with fewer resources, while accelerating skill mastery for all learners.

Erin Grubbs:

We’re going to get started. Hello, and welcome to today’s webinar. We’re thrilled that you’re joining us, and we hope you enjoy the presentation and walk away prepared to inspire your students with this new AI feature. I’m Erin Grubbs, head of marketing here at GoReact, and I’ll be moderating today’s session. For those of you not familiar with GoReact and why this release is so important to us: GoReact is a skill mastery and assessment solution built on our 10 years of experience helping higher ed institutions with student skill development, and we believe these new features will help accelerate skill mastery in some new ways. Before we begin, I’m going to run through a few points of housekeeping. Today’s event will last about 30 to 40 minutes, including about 20 to 25 minutes of discussion and about 10 to 15 minutes for Q&A.

We are recording today’s presentation, so if you need to hop off before we finish or want to share the recording with a colleague, we will be emailing it out later today. We want today’s webinar to be as interactive as possible, so please share your questions throughout the presentation using the Q&A function, and we’ll answer as many as we can during today’s session. You’ll also see the chat function. Please use it to introduce yourself and tell us what school you’re with and your role there, and if you have any links or relevant resources you think would be useful to other attendees, please share them in the chat. Make sure your visibility is set to everyone, and if you experience any technical difficulties, please use the chat to reach out. Without further ado, I’m happy to be joined today by our presenter, Matthew Short, enterprise client success manager here at GoReact. I’m going to hand it over to Matthew to get us started.

Matthew Short:

Excellent. Thank you so much, Erin, and thank you all for joining us today to discover more about the GoReact AI assistant that we are introducing into our skill development platform. We aspire to elevate and accelerate skill mastery by empowering educators and learners with cutting-edge AI features that enhance their ability to provide actionable feedback and self-direct the learning process. With our AI assistant, we’re aiming to support the key stakeholders involved in skill development. For educators, the GoReact AI assistant provides a base level of actionable data and insights, which frees you to focus more of your time and energy on personalized, targeted feedback. It allows your feedback to draw on your knowledge of the individual learner and on your experience and subject matter expertise within your own discipline. As some of you may already know, I’m a father of twin boys and a stepdaughter, which means I have real-life, practical experience of being asked to manage more things than I physically have the hands for, or the mental capacity to handle effectively.

I like to think of that experience when discussing the AI assistant. It is difficult to give individualized time, energy, and focus when so many people rely on our knowledge and subject matter expertise in a given area. We see the GoReact AI assistant as a metaphorical extra set of hands to aid you in this endeavor. By providing a baseline of data analytics and feedback that students can review, it gives you time to provide feedback and guidance based on your knowledge, your experience within your discipline, where your field is, and where your field is going. It also lets you draw on your direct relationship with each student to make sure the feedback you provide is germane and relevant. The assistant is truly intended to help shoulder the load it takes to help learners grow and develop into their best selves.

We also feel that the GoReact AI assistant provides significant benefits directly to your learners. They receive immediate feedback and analytics on their own performance, which lets them start the feedback and review cycle themselves while they await more personalized feedback from their instructors, mentors, or peers. As your students go into their professional careers, the ability to self-teach, self-assess, and self-reflect on their own performance will be invaluable. With the immediate feedback from the GoReact AI assistant, they can start driving their own self-correction and self-improvement, a skill that will continue to benefit them in their careers.

With these pieces in mind, I’m going to start sharing my screen so we can take a look at some of the new features you can expect to see with our AI capabilities. I’m going to share my desktop, and hopefully that is up for you to see. Periodically, as I’m going through the system, I’ll zoom in and out. My intention is not to make this an eye test in case you haven’t been to the eye doctor recently, so I’ll make some adjustments on the fly. As you can see, I’ve got a typical GoReact activity pulled up, with a video submission right here that I can open and look at.

I’m going to click on this particular video, and real briefly, I’m going to mute it. I don’t think I shared my audio on Zoom, so you probably didn’t hear anything, but I don’t want that jabbering in my ears while I’m going through the pieces here. As you’ll see, it looks like a typical GoReact activity at this point, but you may notice that here in the middle there are a couple of additional toolbars and options in the menus. The first thing I want to show you is the new transcript feature. The GoReact AI assistant relies heavily on the transcript of the audio in the video.

Our system listens to the audio track of the video and generates an active transcript, as you can see here on the right. As the video plays, the transcript moves along with it and highlights the part that is currently on screen. You may also be able to see that right above the transcript there is a search utility. If there are specific terms, keywords, or critical items you are looking for in a student’s video, you can use the search utility to locate every instance where that word appears in the transcript.

If the word or phrase you type into the search bar appears multiple times, you can quickly step through each instance, just like using Find in your browser. You’ll get a chance to see this in a little bit when we look at the AI analytics being added to videos, but at the top of the screen, within this little transcript expansion arrow, you also have the ability in certain disciplines to look for indicators of softer, communication-related skills, such as filler words or instances where students may be hedging in what they say in their videos.
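To make the search behavior concrete, here is a minimal sketch of find-in-transcript over a timestamped transcript. The `Segment` structure and `find_occurrences` function are hypothetical illustrations, not GoReact’s implementation; the sketch assumes the transcript is stored as text segments, each tagged with its start time in seconds, so every hit can be used to jump to that point in the video.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    start_s: float  # hypothetical: when this segment starts in the video, in seconds
    text: str


def find_occurrences(segments: list[Segment], query: str) -> list[float]:
    """Return the start time of every case-insensitive match of `query`, one entry per match."""
    if not query:
        return []
    q = query.lower()
    hits: list[float] = []
    for seg in segments:
        # Count every match inside the segment, like a browser's find-in-page.
        hits.extend([seg.start_s] * seg.text.lower().count(q))
    return hits


transcript = [
    Segment(0.0, "Today I want to talk about feedback."),
    Segment(4.5, "Feedback helps learners grow, and feedback should be timely."),
]
print(find_occurrences(transcript, "feedback"))  # [0.0, 4.5, 4.5]
```

This uses plain substring matching, so a query like "feedback" would also match "feedbacks"; a word-boundary regex would be the natural refinement.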

So if I’m looking for filler words, you’ll see here it detects 11 instances, and it highlights each of them within the transcript. The transcript is generated automatically: when the AI features are enabled for your license, there is nothing extra to enable or turn on. It is available to you and to the student to pull up at any point in time.

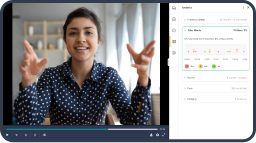

For the next AI capability, I want to go to the analytics tab. We’ve recently added this analytics tab, and you may have noticed that we’ve moved the traditional feedback graph, which used to be buried underneath the video player and had to be popped out to be seen. It now lives within the analytics tab, and initially it shows where markers and comments were added along the timeline, giving you a visual representation of when feedback was left.

If you hover over those markers on the screen, it shows you what was noted and the timestamp of that comment or marker. You also get a visual of where feedback and markers are grouped together: this particular point of the video is pretty well marked up, but there’s a gap between these two sections, so maybe I need to take another glance there to see if there’s anything of note. Or maybe it truly was a lull in the video where nothing of consequence was going on, and that’s why there’s no feedback. Either way, it gives you a nice visual cue to make that determination yourself.
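One way to think about the gaps the feedback graph makes visible is as stretches of the timeline with no comments or markers. The sketch below is a hypothetical illustration, not how GoReact draws the graph; it assumes comment timestamps are available in seconds and uses an arbitrary 60-second threshold for a gap worth a second look.

```python
def feedback_gaps(comment_times_s: list[float], video_length_s: float,
                  min_gap_s: float = 60.0) -> list[tuple[float, float]]:
    """Return (start, end) spans of the video with no feedback for at least min_gap_s seconds."""
    # Treat the start and end of the video as boundaries so leading and trailing gaps count too.
    points = [0.0] + sorted(comment_times_s) + [video_length_s]
    return [(a, b) for a, b in zip(points, points[1:]) if b - a >= min_gap_s]


# Comments cluster early, then nothing until 2:30 in a 3-minute video.
print(feedback_gaps([12.0, 20.0, 25.0, 150.0], video_length_s=180.0))  # [(25.0, 150.0)]
```

A gap found this way is only a prompt to look again; as noted in the demo, it may simply be a lull where nothing needed feedback.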

Beyond the feedback graph, you may notice a couple of other boxes here, such as filler words. Our AI assistant notes any time a student, or anyone speaking in the video, uses a filler word. You’ll see down here it’s picking up words like “oh,” “so,” “ah,” “just,” and “like,” and the visual shows which filler word was used at what point in time. It looks like at this particular juncture there were a number of filler words, so maybe the speaker was a little less confident in that section. The student can look at this and see that they need to clean up that segment of the presentation, or an instructor could call it out if the student hasn’t already self-identified or self-corrected it.
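The count and percentage shown in the filler words box can be illustrated with a simple word-matching sketch. This is a hypothetical example, not GoReact’s detector: the filler list is an assumption seeded with the words mentioned in the demo plus “um” and “uh,” and naive matching like this also flags legitimate uses of words such as “so” and “like,” which a real system would need context to rule out.

```python
import re

# Assumed filler list: "oh", "so", "ah", "just", "like" are from the demo; "um" and "uh" are added.
FILLERS = {"oh", "so", "ah", "just", "like", "um", "uh"}


def filler_stats(transcript: str) -> tuple[int, float]:
    """Return (filler count, filler words as a percentage of all words)."""
    words = re.findall(r"[a-z']+", transcript.lower())
    count = sum(1 for w in words if w in FILLERS)
    percent = 100 * count / len(words) if words else 0.0
    return count, percent


count, pct = filler_stats("So, um, I think we should, like, start now.")
print(count, round(pct, 1))  # 3 33.3
```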

Here it indicates how many filler words were used and what percentage of the overall transcript they make up. We’re also taking a look at pauses. Sometimes pauses are deliberate: if I’m delivering a presentation or discussing a topic with my class, maybe I’m pausing for emphasis at particular points. Or maybe it’s something I don’t realize I’m doing. This can be an opportunity for me to self-assess, or for my instructor, peers, or mentors to note, “Hey, at this particular point there was a really long pause. Was that intentional? If it wasn’t, I don’t think it was as effective.”

It’s an opportunity for you to see how many detectable pauses there are in the video. Here it’s detecting eight pauses, zero of which are what we categorize as lengthy; a lengthy pause is anything two seconds or longer. If there had been any pauses of that length, they would be counted separately as lengthy pauses. This is another way to assess soft skills like presentation and communication: are these pauses deliberate, or are they something I need to correct or adjust?
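Pause detection can be sketched from word-level timestamps by measuring the silence between the end of one word and the start of the next. The `Word` structure and the 0.5-second minimum pause are hypothetical assumptions; only the two-second threshold for a lengthy pause comes from the demo.

```python
from dataclasses import dataclass


@dataclass
class Word:
    text: str
    start_s: float  # hypothetical word-level timing, in seconds
    end_s: float


MIN_PAUSE_S = 0.5      # assumption: shortest silence counted as a pause
LENGTHY_PAUSE_S = 2.0  # from the demo: two seconds or longer is "lengthy"


def find_pauses(words: list[Word]) -> tuple[int, int]:
    """Return (total pauses, lengthy pauses) from gaps between consecutive words."""
    gaps = [b.start_s - a.end_s for a, b in zip(words, words[1:])]
    pauses = [g for g in gaps if g >= MIN_PAUSE_S]
    lengthy = [g for g in pauses if g >= LENGTHY_PAUSE_S]
    return len(pauses), len(lengthy)


words = [Word("Good", 0.0, 0.3), Word("morning", 0.35, 0.8),
         Word("everyone", 1.6, 2.1), Word("today", 4.3, 4.6)]
print(find_pauses(words))  # (2, 1)
```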

Pacing. Anybody who’s worked with me knows that I’m pretty verbose and will talk ad nauseam, and that might get flagged in some of these analytics as I do more presentations and recordings, so this will actually be really helpful for me. The pace view shows the overall flow of speaking during the video. If it’s a recording of you out in the field, other people’s speech may be captured here too, but when you are delivering a speech or presentation, this can be an accurate gauge of your pacing.

You can see the ebbs and flows of this particular speaker’s speaking patterns over the course of the presentation. Let me get the video playing again just so there’s something on the screen. At the top, it captures your average words per minute over the video, along with a frame of reference showing that it’s normal to be between 120 and 150 words per minute. That gives you a sense of whether your content is being received effectively, or whether you’re speaking too quickly, delivering a verbal onslaught on your audience, and really need to taper things back a little.
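The average words per minute and the ebb-and-flow chart can both be approximated from word start times. This is a hypothetical sketch, not GoReact’s calculation: the 30-second window size is an arbitrary choice, while the 120 to 150 words-per-minute reference range is the one given in the demo.

```python
def average_wpm(word_count: int, duration_s: float) -> float:
    """Average speaking rate over the whole recording."""
    return word_count / (duration_s / 60)


def pace_by_window(word_starts_s: list[float], window_s: float = 30.0) -> list[float]:
    """Words per minute within consecutive windows, for an ebb-and-flow style chart."""
    if not word_starts_s:
        return []
    counts = [0] * (int(max(word_starts_s) // window_s) + 1)
    for t in word_starts_s:
        counts[int(t // window_s)] += 1
    # Scale each window's count to a per-minute rate (the last window may be partial).
    return [c * 60 / window_s for c in counts]


def pace_label(wpm: float, low: float = 120.0, high: float = 150.0) -> str:
    """Compare a rate with the 120-150 wpm reference range from the demo."""
    if wpm < low:
        return "slower than typical"
    if wpm > high:
        return "faster than typical"
    return "within typical range"


wpm = average_wpm(700, 240.0)  # 700 words in a 4-minute video
print(wpm, pace_label(wpm))    # 175.0 faster than typical
print(pace_by_window([0.0, 1.0, 2.0, 31.0, 32.0]))  # [6.0, 4.0]
```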

The last automated analytic here is hedging words. Hedging words are words or phrases you add to your speech to hedge or add caveats, which can undercut the authority of what you’re delivering and diminish the subject matter expertise you are trying to convey in a presentation. Our system notes the instances where students use hedging words such as “could,” “might,” and “should,” which can make clear to your students, “Hey, I’m taking a little bit off my presentation because I’m being a little indecisive or insecure about what I’m conveying to the audience here.”

Again, you’ll see the number of instances and what percentage of the overall speech in the video they represent, along with a frame of reference: it’s normal for less than 5% of the words in a video to be hedging words. As with the other analytics we’ve seen, you do not need to enable these on any activity. They are turned on automatically when the GoReact AI assistant is added to your GoReact license, and they are available to you and your students whenever you’re ready.
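Hedging share can be sketched the same way as filler words, except that some hedges are multi-word phrases. In this hypothetical example, “could,” “might,” and “should” come from the demo, while “i think,” “sort of,” and “kind of” are assumed additions; each phrase counts once toward the instance total, and the percentage is taken over all words so it can be compared with the under-5% reference.

```python
import re

# "could", "might", "should" are from the demo; the multi-word phrases are assumptions.
HEDGES = ["could", "might", "should", "i think", "sort of", "kind of"]


def hedging_stats(transcript: str) -> tuple[int, float]:
    """Return (hedge instances, instances as a percentage of all words)."""
    text = transcript.lower()
    total_words = len(re.findall(r"[a-z']+", text))
    # \b word boundaries keep "could" from matching inside a longer word such as "couldn't".
    hits = sum(len(re.findall(rf"\b{re.escape(h)}\b", text)) for h in HEDGES)
    percent = 100 * hits / total_words if total_words else 0.0
    return hits, percent


hits, pct = hedging_stats("I think this might work, and we should test it soon.")
print(hits, round(pct, 1), pct < 5.0)  # 3 27.3 False
```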

The last option I’ll show you, and probably the coolest of them all, is that we are also adding the GoReact AI assistant to the comment and marker utility within GoReact. You still have the same ability to use your own marker sets as you’ve done in the past, but our AI assistant will also review the transcript and leave AI markers and comments in the video, giving the student feedback directly on what it picks up in this particular performance.

You’ll notice that, just like any other comment, it carries the AI assistant’s name, so students can quickly see that this came from the AI assistant and not from Professor Short. They can see the time in the video where the feedback was noted and which marker the AI selected. And when you have comments enabled, they will see a piece of feedback that offers a suggestion or gives praise for something they did at that particular point in time.

So if I go in here, this one says, “You use commanding language to emphasize the key message.” If I click on it, just like any other marker or comment, it jumps to that point in the video so the student can see what was going on and recognize, “Oh yeah, that was commanding language. That was great.” Students can start reviewing and engaging with this commentary before you have even logged in to look at their video, which again gives them the opportunity to review, self-reflect, and self-improve based on the feedback they receive from the AI assistant.

You may also have noticed that each of these comments has little thumbs up and thumbs down icons. We understand that AI is not perfect and has room to grow, so we wanted to add an option for students and instructors to indicate, “Yes, this was valid, germane, great feedback. You get a gold star,” or, “This wasn’t really helpful. It doesn’t help the student in any way, shape, or form,” and give it a thumbs down. It has a very gladiator feel to it, I guess. But it gives you, as the consumer of this feedback, an opportunity to flag the instances where “this is great, we want to see more of this,” or the opposite, “this isn’t the best and should probably be improved or looked at.”

One other note about the AI feedback and markers: you can edit or add context to the AI assistant’s commentary, just like any comment you add to the commentary log yourself. If I wanted to, I could add comments of my own, or add other markers at a particular point for things I’m specifically looking for. And if something is truly not helpful in any way, I could even delete it from the commentary itself.

I emphasized that the other two items I’ve shown you so far, the durable transcript and the analytics tab, are available automatically. AI markers and comments, on the other hand, are something you enable in the activity settings. If I go back to the activity dashboard, go up to the top of the page, and edit the activity settings, you’ll see a new AI assistant section under the feedback settings. Right now I’ve got this particular marker selected. If I go into the edit panel here, I can see the different AI markers and feedback options available to me.

I can peruse the list of markers and select the disciplines that are relevant to my particular activities or lessons. From there, I’m given a list of markers that our AI tool will look for in the video transcript. When it identifies one, it tags it with that marker, and if AI comments are enabled, it also leaves a comment or suggestion based on that marker to give the student feedback. You can add up to 10 AI markers to any activity in GoReact, so if I kept checking more boxes here, those would become items our AI assistant actively looks for in the transcript and gives students feedback on.

These markers are created by GoReact, and we’ve done a pretty good job of adding markers for a wide variety of disciplines. However, we do want your feedback on opportunities to expand our AI marker set. Down here in this interface, you’ll see the option to request additional markers. If you’re looking through your discipline’s marker set and feel there are gaps that, if filled, would really add value to your discipline, you can request those markers from our product and design team. They can review the requests and see whether those are things we can add to the product. So as you use this tool, please feel free to suggest additional markers that you feel would add value in your discipline.

So if you wish to use the AI markers and comments, you set that up in the activity settings. And again, you can still use your own markers just like on any GoReact activity. It’s not one or the other; you can use both on any GoReact activity. Just note that AI markers are tagged automatically, whereas traditional markers are still added to the commentary log by you, or by students when you ask them to use those marker sets.

At this point I’ve shown you the three core tenets of our AI assistant within GoReact. We’ve seen the durable transcript and the ability to search it for key indicators. I’ll also highlight that if there’s a particular part of the transcript you want to jump to, you can click on it just like any other comment (sorry, I forgot to [inaudible 00:23:08] the video), and it will jump to that point in the timeline so you can see that particular moment. We’ve seen the various analytics: the traditional feedback graph, filler words, pauses, pacing, and hedging words. Again, these are added automatically and do not have to be enabled, so students can review them and take immediate action on the speaking patterns in their own presentations or videos.

And last but certainly not least, we’ve seen the AI markers set up within GoReact that you can choose to enable on specific assignments. They provide an initial round of feedback to students while you take the time to review those videos more thoroughly, based on your relationship with the student and what you know they need the most help with. That also lets you focus on your background and experience in your field and provide the salient, germane feedback students can only get from instructors who know their work and their field so well. With that, I’m going to pause here. We have plenty of time for questions, so let me pull up the chat and the Q&A window off to the side.

Erin Grubbs:

Yeah, I can help with the questions, Matthew.

Matthew Short:

Excellent, thank you Erin.

Erin Grubbs:

And if there are any we need to pass over to product, let me know. I know some of this is new, and we’re learning too. Let’s start with the transcript, since a lot of people seem really interested in that functionality. Will the transcript differentiate between speakers if there are multiple speakers, such as a teacher and a student talking at the same time?

Matthew Short:

Yeah, that’s a great question. At this point, it looks like it simply transcribes any speech in the video. I’ll definitely confirm with our product team to make sure that’s the correct answer, but at least for now, it seems to generate the transcript regardless of who is speaking.

Erin Grubbs:

No, that sounds good. And all of these questions and feedback will definitely go over to the product team. They’re anxious to hear from users about what’s important to them. Another question on the transcript: is it downloadable for future analysis?

Matthew Short:

For the initial release, it can’t be exported or pulled out of the system, though that is feedback we are hearing. If that changes or the capability is added, we will definitely let our clients know when it’s available.

Erin Grubbs:

Okay. Let’s see. A couple questions around other languages and accents and the product. I know we’ve got some information on that for people, so can you talk a little bit about that?

Matthew Short:

Yeah, absolutely. At this point in time, the transcription and the tools within our system are mainly focused on English. So if, let’s say, Spanish or French is being spoken in a foreign language or global language course, many of the analytics and tools will not be able to accurately assess or mark those items. For now, they depend on English being spoken in the video that is being recorded.

Erin Grubbs:

Thanks, Matthew. Lots of people are excited about the markers you showed, and I think they would like a closer look at them. Specifically, we got a request to see the teacher preparation markers, so could we take a look at those?

Matthew Short:

Absolutely.

Erin Grubbs:

And then while you’re doing that, I know you showed that they can request new markers, but if there are ones that they want aligned to their standards or something that they’re doing, would they just use that request new markers tool that you showed them?

Matthew Short:

Yeah, sorry, let me back out of there real quickly. So, absolutely: as you’re reviewing the different markers in the AI marker settings, if you see gaps, or if there’s anything specific to your discipline, please feel free to use that request option within the settings. If I go back in here to my AI assistant section, you’ll see you can select the primary use case, teacher preparation being one of those. You can go through the initial offering available here and check off the ones you’d like to use within that discipline or primary use case.

As you’re going through, if you see things that are not covered by the markers in the set, the request additional markers option links to a form where you can submit those, provide additional context, and include your contact information so that we can follow up and work with you to see whether there is a larger need that the marker set might meet. We can’t promise personalized or individualized marker sets, but if we see a larger need that can be met by adding markers here, that’s an opportunity we might be able to explore with you all.

Erin Grubbs:

Thanks, Matthew. Another question, and I know this is AI so it’s learning, but how accurate do we think the AI assistant is with the feedback it’s providing? You touched on the thumbs up and thumbs down, but...

Matthew Short:

Yeah. I won’t venture to define or put a number on accuracy. I’ll just say that, as with any AI product, it is based on repetition and on the data and information we receive, and it’s also heavily dependent on the engagement we get from our clients. Our ability to improve it and make it more impactful will benefit greatly from you coming in and engaging with the content. So if you’re seeing great comments, please, you and your students, make sure you’re noting, “Hey, this is a great piece of feedback. More of this, please.”

In instances where it is not beneficial or helpful, or in your judgment is not an accurate piece of feedback, please note those too. That information and context is what will really help us improve the AI and make it more helpful and germane as we build it out and add capabilities. I do believe in truth, but I also understand there is some gray area in feedback and in what we might describe as accurate, so we want your feedback and engagement on what you find helpful or beneficial to your learners as they engage with this content.

Erin Grubbs:

Thanks. We got a question about GoReact subscriptions and how this is included. Does the essentials package include this, or do customers need to upgrade? How does that work?

Matthew Short:

Great question. The essentials GoReact package does not include the AI markers or the analytics. Well, the essentials package does include the basic feedback graph, but the other analytics, along with the durable transcript, are part of the advanced package. The essentials package is pretty much everything you’re used to with GoReact, so if you’ve been using our product for years, the AI components will not be added to it automatically. The advanced package is what provides the AI components. It also comes with additional benefits, such as a larger upload size cap of 10 gigabytes per video and high-definition recording when you’re using an HD-capable camera.

So with the advanced package, you can anticipate a little more clarity in your video recordings. If the AI components are something you’re interested in, we’re happy to discuss pricing and, if you’re an existing client, adding the advanced package to your license.

Erin Grubbs:

Yeah, and I believe we sent current customers some information last week on an offer available to anybody who is an existing GoReact customer, so check that out. There should be more information about that offer going out in the next week as well. I think we’ve got time for one more question: will today’s recording be available? Yes, we’ll be emailing it out by the end of the day, so you should be able to get access to it. We also got a lot of questions about the markers, so I’m going to work with the product team to see if we can compile a list of what exists currently and send that out, either in the follow-up or in a separate email. So we will touch base on that.

Thank you, Matthew, for this excellent presentation and demo and for digging into all those great questions. You provided a lot of valuable information, and we’re so excited to have everyone start using the AI assistant. As mentioned, there is that offer for customers, but we’re also offering existing customers a free trial of the AI assistant from June 3rd to the 30th. It will be available automatically in your GoReact account, and you’ll be able to turn it on in your activity settings, just like Matthew showed you.

For non-customers, if you are interested in the trial from June 3rd to the 30th, you can also sign up. You’ll just need to sign up for a GoReact license, which is free during that time period, and you’ll be able to try out the tool just like our customers. For more information, visit the link we’re about to put in the chat, if we haven’t already. We’re excited for everyone to get started and would really appreciate any feedback that comes from the trial, so please make sure you’re using the tools Matthew showed you to give us feedback on the AI assistant. Thank you, attendees, for your time, for joining us, and for making this a very interactive presentation. We hope to see you at a future GoReact webinar. Have a great rest of the week. Thanks.